- How come you applied the best strategies to make the best decision, yet the solution you arrived at did not live up to your expectations?

- How come you spent so much time analyzing the data, but the solution did not bring you the happiness you were after?

- How come you have dissected the problem so well, yet the solution was only mediocre?

How I made a rational decision

Ten years ago I was buying my first-ever work laptop, and I was free to choose any model I wanted. Laptops were very expensive then, so I wanted to make the best possible decision.

What did I do?

I took it very seriously. I spent a whole month researching available laptops on the Internet. Although I defined a few important characteristics, that still left me with more than 150 candidates. I studied reviews, user comments about their experiences, and multiple indices and statistics.

In the meantime, my colleagues made their choices. It took them perhaps one or two hours to settle on particular Asus laptops. Then they teased me about being stuck in multi-objective optimization. They had lots of fun with my decision-making process.

Finally, I arrived at the perfect laptop: the best combination of memory, hard disc, graphics, processor speed, touchpad, etc. To my surprise, happiness did not follow. Despite my lengthy rational decision-making process, the laptop didn't serve me well. It turned into a horrible experience.

It was a laptop that never really worked. I mean, it handled simple tasks, but most of the time something was wrong with the touchpad, mouse or keyboard that prevented me from working smoothly. It caused months of frustration.

The laptop went through multiple repairs until I lost patience and abandoned it completely. I was crushed, especially since my colleagues' Asus laptops worked like a charm.

I learned my lesson then. With gratitude.

The next time I bought a laptop, I gave myself a few hours to decide. Most of that time went into learning about the available options and laptop characteristics. The decision itself took a split second.

First, I decided to buy an Asus. Next, I asked my colleagues for suggestions (read: learned what the experts say) about which features currently matter most for the tasks I would need to perform. Finally, I looked through the available Asus laptops and picked the one that appealed to me most. I simply chose a laptop that felt right. I also made a conscious decision to make it work for me. It was a great buy indeed, and it, too, worked like a charm.

What’s the point of this story?

Rational decision making

In general, there are three approaches to decision making: rational, emotional and intuitive (or any combination of these, applied one after another). In the rational decision-making scenario, you:

- Define the case and the decision to be made.

- Identify the features (characteristics) of the problem.

- Identify the criteria for the result.

- Analyze all possible solutions.

- Predict the goodness of fit, i.e. estimate the consequences of each solution based on its ability or likelihood to fulfill the criteria.

- Choose the best option.
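
The six steps above can be sketched in a few lines of Python. This is a minimal illustration, assuming the criteria can be expressed as numeric feature scores and combined by a weighted sum; the laptop names, scores and weights are hypothetical and only make the steps concrete.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    features: dict[str, float]  # step 2: each option described by its features


def goodness_of_fit(option: Option, weights: dict[str, float]) -> float:
    """Step 5: score an option as a weighted sum of its (normalized) features."""
    return sum(w * option.features[f] for f, w in weights.items())


def rational_choice(options: list[Option], weights: dict[str, float]) -> Option:
    """Steps 4 to 6: evaluate every option and return the highest-scoring one."""
    return max(options, key=lambda o: goodness_of_fit(o, weights))


# Hypothetical data: features already scaled to 0..1, higher is better.
candidates = [
    Option("laptop A", {"speed": 0.9, "memory": 0.6, "lightness": 0.3}),
    Option("laptop B", {"speed": 0.5, "memory": 0.8, "lightness": 0.9}),
    Option("laptop C", {"speed": 0.7, "memory": 0.7, "lightness": 0.6}),
]
# Step 3: the criterion, encoded as importance weights (hypothetical).
weights = {"speed": 0.5, "memory": 0.3, "lightness": 0.2}
best = rational_choice(candidates, weights)
print(best.name)
```

Every assumption listed below is baked into this sketch: the candidate list is complete, the features are descriptive, the weighted sum is the right evaluation function, and `max` returns exactly one winner.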

It sounds pretty straightforward, but it is not. Why? Because rational decision making relies on a few strong assumptions:

- All options can be considered.

- There are descriptive features for the task.

- There is a clear way (function) to evaluate the future consequences of the features.

- There is a well-defined criterion for the result.

- There is one best outcome.

In reality, these presuppositions often do not hold. First, it may be hard to choose a few important features and to express a performance measure as a function of them. Even once you have defined the most important features, you may want to include others, weighted to reflect their importance. Second, it may be impossible to evaluate all options. Third, it may be hard to judge the quality of the result from an external point of view. Finally, there may be multiple equally good solutions.

The rational approach is challenging because we hardly ever have well-defined features and a performance (or error) measure available for optimization. What we usually face is multi-objective optimization: we have several criteria or error measures that we want to optimize simultaneously. Since the criteria are often interconnected, one needs to fix a linear or nonlinear trade-off between them in advance, which is hard, if not impossible.

To make things worse, we are not always sure whether some of the features should be treated as part of the criterion 😉 If you work in multi-objective optimization, you know that this is not straightforward. The solution lies somewhere on the so-called Pareto front: a surface of solutions, none of which can be improved in one criterion without getting worse in another.
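
To make the Pareto front concrete, here is a minimal sketch that keeps only the non-dominated options. It assumes every criterion has already been turned into a number to be minimized (price, weight, and negated RAM); the laptops and their figures are made up for illustration.

```python
def dominates(a: tuple[float, ...], b: tuple[float, ...]) -> bool:
    """True if a is at least as good as b on every criterion and strictly better on one.

    All criteria are minimized.
    """
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(options: dict[str, tuple[float, ...]]) -> list[str]:
    """Return the names of the options that no other option dominates."""
    return [
        name
        for name, score in options.items()
        if not any(dominates(other, score) for other in options.values())
    ]


# Hypothetical laptops: (price in EUR, weight in kg, -RAM in GB); lower is better everywhere.
laptops = {
    "A": (900, 2.2, -16),   # cheapest
    "B": (1400, 1.2, -16),  # lightest
    "C": (1200, 1.8, -32),  # most memory
    "D": (1300, 2.0, -16),  # worse than C on every criterion
}
print(pareto_front(laptops))
```

Three of the four laptops survive. The code cannot pick among them, because doing so requires exactly the trade-off that is so hard to define in advance.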

Consider this scenario

Imagine you want to buy a laptop. If your only criterion is cost, you can optimize it easily by buying the cheapest laptop available. Yet this is not what you want.

Say you are a programmer or researcher and you want a laptop with a fast processor and a lot of memory. It also has to be light, because you want to carry it around while running memory-intensive applications. But you are also picky about some user-experience features.

You have strong opinions about keyboards and only like ones with a certain softness. You also hate flashy buttons and want a laptop with a plain keyboard. You want the cheapest laptop that satisfies all of these constraints. Some of them can be quantified numerically (memory size), while others cannot (keyboard softness).
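
This scenario is a constrained optimization: discard every laptop that violates a hard constraint, then minimize cost among the rest. A minimal sketch covering some of the constraints, with hypothetical thresholds and data; note that keyboard softness has to be forced into a yes/no judgment before the code can use it, which is exactly the quantification problem just mentioned.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Laptop:
    name: str
    price_eur: float
    ram_gb: int
    weight_kg: float
    soft_keyboard: bool  # a subjective impression, forced into yes/no
    plain_keys: bool     # True if there are no flashy buttons


def cheapest_acceptable(
    laptops: list[Laptop], min_ram_gb: int = 16, max_weight_kg: float = 1.6
) -> Optional[Laptop]:
    """Drop every laptop that breaks a hard constraint, then return the cheapest survivor."""
    acceptable = [
        lp
        for lp in laptops
        if lp.ram_gb >= min_ram_gb
        and lp.weight_kg <= max_weight_kg
        and lp.soft_keyboard
        and lp.plain_keys
    ]
    # default=None: if nothing satisfies the constraints, there is no answer.
    return min(acceptable, key=lambda lp: lp.price_eur, default=None)


# Hypothetical candidates (thresholds above are hypothetical too).
laptops = [
    Laptop("A", 800, 8, 1.4, True, True),     # too little RAM
    Laptop("B", 1100, 16, 1.5, True, False),  # flashy buttons
    Laptop("C", 1250, 16, 1.3, True, True),
    Laptop("D", 1500, 32, 1.2, True, True),
]
choice = cheapest_acceptable(laptops)
print(choice.name if choice else "no laptop fits")
```

Tightening a single threshold, for example `min_ram_gb=64`, empties the candidate list, and the sketch then returns `None`.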

If you have a fixed budget, you may decide to sacrifice hard disc space for more RAM. What also happens is that some features (such as large RAM and a big hard disc) end up in the goodness-of-fit criterion, where you look for a trade-off between cost and the sizes of memory and storage.

What should the trade-off be?

You will usually not define it explicitly. Instead, you will analyze a number of options and end up hesitating between a few. At that point, the remaining candidates differ only in how they trade the individual features off against each other, often nonlinearly. Making a rational choice would require defining the optimal trade-off function, which is often impossible.
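
A small sketch shows the difficulty: take a shortlist of options that are all Pareto-optimal, score them with a weighted trade-off, and vary the weights. The options, scores and weights are hypothetical; the point is only that the winner is decided by the trade-off you pick, not by the data.

```python
# Hypothetical shortlist, each feature scaled to 0..1 with higher meaning better.
shortlist = {
    "budget":   {"cheapness": 0.9, "memory": 0.3, "storage": 0.4},
    "balanced": {"cheapness": 0.6, "memory": 0.6, "storage": 0.6},
    "power":    {"cheapness": 0.2, "memory": 0.9, "storage": 0.8},
}


def winner(weights: dict[str, float]) -> str:
    """Pick the option with the highest weighted sum under the given trade-off."""
    return max(
        shortlist,
        key=lambda name: sum(w * shortlist[name][f] for f, w in weights.items()),
    )


# Three plausible trade-offs, three different "rational" answers.
trade_offs = [
    {"cheapness": 0.8, "memory": 0.1, "storage": 0.1},
    {"cheapness": 0.4, "memory": 0.3, "storage": 0.3},
    {"cheapness": 0.1, "memory": 0.5, "storage": 0.4},
]
for weights in trade_offs:
    print(weights, "->", winner(weights))
```

A weighted sum is only one possible trade-off function; a nonlinear one would reshuffle the winners again.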

Consequently, you will either analyze the few remaining choices endlessly, collecting more outside evidence such as reviews and opinions (extra features that enter the equation), or you will decide by emphasizing the single most important feature (say, cost).

However, you may also take a different route: restrict one feature, the laptop's weight, to a certain range and then define a good trade-off between the sizes of memory and hard disc.

Do you see what I mean? It is inherently difficult to define descriptive features, their importance weights or the goodness-of-fit measure.

The curse of dimensionality

Let’s look at the features now. In statistical learning, there is a famous phenomenon called “the curse of dimensionality”, “overtraining” or “Rao’s paradox”. I will explain it below because it affects our decision making.

Initially, we may assume that the more details (features) of the case we collect, the better the description of the situation at hand. The more details, the better the description and the better informed the decision.

Counterintuitively, this assumption does not hold.

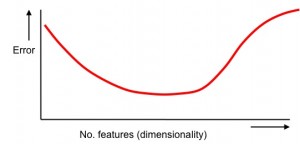

Consider traditional statistical learning. If you take a single model and keep adding features (details) while tracking how well it does according to a chosen error measure or performance criterion, you will observe a very particular behavior.

If you keep the number of observations (say, laptops to consider, people, etc.) fixed but add more and more features (details), the error will initially decrease, only to increase steadily after a while. See the image to the right. A goodness-of-fit function behaves the other way around: it initially increases with the number of features and, once a certain number of features is reached, steadily decreases.
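
This behavior can be reproduced with a small simulation. It is a sketch under stated assumptions, modeled on G. V. Trunk's well-known 1979 example, and not the experiment behind the image: two classes are Gaussian with unit variance and means +mu and -mu, where the i-th feature has mean 1/sqrt(i), so each new detail carries slightly less information than the previous one. A simple linear rule is estimated from only five examples per class. Because the test distribution is known, the error for a given estimated mean can be computed exactly with the normal CDF instead of being sampled.

```python
import math
import random


def normal_cdf(z: float) -> float:
    """Standard normal CDF, computed with the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def peaking_curve(
    dims: list[int], n_per_class: int = 5, trials: int = 20, seed: int = 0
) -> dict[int, float]:
    """Average test error of a plug-in linear classifier for each feature count in dims.

    Assumed setup: class +1 ~ N(mu, I), class -1 ~ N(-mu, I), with mu_i = 1/sqrt(i).
    Features are added in order, so larger feature sets contain the smaller ones.
    """
    rng = random.Random(seed)
    max_d = max(dims)
    mu = [1.0 / math.sqrt(i) for i in range(1, max_d + 1)]
    totals = {d: 0.0 for d in dims}
    for _ in range(trials):
        # Estimate every mean component from the same small training set.
        mu_hat = []
        for m in mu:
            pos = sum(rng.gauss(m, 1.0) for _ in range(n_per_class)) / n_per_class
            neg = sum(rng.gauss(-m, 1.0) for _ in range(n_per_class)) / n_per_class
            mu_hat.append((pos - neg) / 2.0)
        # Add features one at a time. The rule "class +1 if x . mu_hat > 0" then has
        # exact error Phi(-(mu . mu_hat) / ||mu_hat||) for either class.
        dot = norm_sq = 0.0
        for d in range(1, max_d + 1):
            dot += mu[d - 1] * mu_hat[d - 1]
            norm_sq += mu_hat[d - 1] ** 2
            if d in totals:
                totals[d] += normal_cdf(-dot / math.sqrt(norm_sq))
    return {d: totals[d] / trials for d in dims}


errors = peaking_curve([1, 3, 10, 30, 100, 300, 1000])
for d, err in errors.items():
    print(f"{d:5d} features: error {err:.3f}")
```

With these settings the error drops over the first few dozen features and then climbs back up, because the estimation noise of the extra features outweighs their shrinking information. With the true means known (unlimited training data), adding these features would keep lowering the error.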

What we are saying here is this:

Beyond a certain point, adding new details becomes useless; worse, they may work against your model (its error measure or goodness-of-fit function), and you get worse results.

Incredible, isn’t it?

Human decision making

Consider now human decision making.

A consultant looks at the symptoms of a difficult cancer case and has to decide whether to send the patient for surgery or radiotherapy. A company CEO analyzes multiple indices of company performance (sales, cash flow, marketing strategies, etc.) and has to decide on the next growth strategy. And there is you, choosing your laptop, your university, your holiday destination, your next job, your house, your wife and so on.

If we apply the same analogy to human decision making based on data, we may conclude that the quality (performance) of a decision initially increases up to a few features (pieces of information) and deteriorates as more features are added. In terms of errors, the error of the decision initially decreases up to a few features and increases if you keep including more.

Our rational mind is unable to juggle more than three to seven pieces of information at any given moment.

We cannot possibly weigh, say, 20 features and optimize trade-offs between multiple criteria in our heads to reach the best decision. The reason is simple: there are too many variables of different kinds, the models are too complex (non-descriptive features, nonlinear relations between features, multiple or nested criteria, a vague goodness-of-fit function, etc.), and the optimum is simply not uniquely defined. It is not a point but a surface of possible solutions for various trade-offs.

And you lack a meta criterion to tell you which trade-off to emphasize.

Rational analysis: a brief how-to

The lesson is as follows. If you want to stick to rational decision making, constrain your problem and the solution path sufficiently:

- Select a few well-defined features (characteristics, descriptions), ideally not more than seven.

- Choose a simple criterion.

- Choose a simple goodness function or performance measure.

For instance, you may consider a case with “yes”/“no” decisions described by a number of binary features (“yes”/“no”) and a simple goodness-of-fit function calculated as a weighted average of feature contributions. Or you may have a complex model (in your working brain) but rely on only a few well-defined features. Either will work well.
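
The first example, a yes/no decision built from a handful of yes/no features and a weighted average, fits in a few lines. The feature names, weights and the 0.5 threshold below are hypothetical choices for illustration.

```python
def decide(answers: dict[str, bool], weights: dict[str, float], threshold: float = 0.5) -> bool:
    """Return True ("yes") if the weighted average of the yes/no features reaches the threshold."""
    total = sum(weights.values())
    # Each "yes" contributes its weight; each "no" contributes nothing.
    score = sum(w for f, w in weights.items() if answers[f]) / total
    return score >= threshold


# Hypothetical: should I accept this job offer? Five binary features, weighted by importance.
weights = {"salary_ok": 3, "commute_short": 2, "team_liked": 3, "growth": 1, "remote_option": 1}
answers = {
    "salary_ok": True,
    "commute_short": False,
    "team_liked": True,
    "growth": False,
    "remote_option": True,
}
print(decide(answers, weights))
```

Here the weighted average is (3 + 3 + 1) / 10 = 0.7, so the answer is "yes". With five features, one criterion and one threshold, the optimal decision within this framework is well defined.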

In such cases, you can define optimal decisions within the limited framework. In many other cases, however, your rational decision is suboptimal because you cannot define the necessary features and criteria well. Moreover, even your best decision within that framework will likely not be the best overall, because you may have missed a feature or change that you were unaware of at the time but that will strongly influence the situation.

In addition, too much data and information will inhibit your decision making. You may simply get stuck on a surface of possible solutions that optimize the features and criteria in different ways, without knowing which meta goodness-of-fit function would select the single best one. So you will hesitate, or wait for ages until you get tired and pick an arbitrary solution from the acceptable ones.

So, what is the best approach for complex situations?

Is it emotional decision making???

Addendum

This is added by my friend Bob.

It is fascinating that computers and humans alike suffer from the “curse of dimensionality”, or confusion by details. This phenomenon has been measured objectively in many experiments. The results of an early one, from the 1970s, are shown in the image to the right (an old scan of an old paper, so please forgive the quality). They show that the diagnostic accuracy of a group of 100 medical experts first increases and then decreases as a function of the number of medical tests (symptoms) considered. This suggests that in a sufficiently extensive medical examination, some problem will almost always be found. Maybe there is nothing wrong at all, and it is just the result of statistics!
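
A back-of-the-envelope calculation shows why a large examination almost always finds something. Assume, for illustration, that each test's normal range is set to cover 95% of healthy people and that the tests are independent (a simplification, since real tests are often correlated). Then the chance that a perfectly healthy person gets at least one "abnormal" result in k tests is 1 - 0.95^k.

```python
def prob_at_least_one_abnormal(k: int, normal_coverage: float = 0.95) -> float:
    """Chance that a healthy person falls outside at least one of k independent normal ranges.

    Assumes each range covers `normal_coverage` of healthy people and tests are independent.
    """
    return 1.0 - normal_coverage ** k


for k in (1, 5, 20, 50):
    print(f"{k:3d} tests: {prob_at_least_one_abnormal(k):.0%}")
```

Under these assumptions, 20 tests already give a healthy person roughly a 64% chance of at least one flagged result, and 50 tests push it above 90%.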

***

Photo courtesy Jamie Frith, available under the Creative Commons license on Flickr.

***